HumorBench

Research Preview

Early insights shared publicly as we work toward a humor benchmark for speech models

Overview

You’re probably wondering, who is this team, and why do they care how funny speech models are? Please indulge a brief preamble.

We are a group of researchers, creatives, and technologists mostly in awe of AI, with some notes on its aesthetic taste. The best reasoning models can pass the bar and crack novel math problems, and yet we still don’t find them capable of truly good writing … or humor.

So we’ve embarked on a quest to help models improve their soft skills through Reinforcement Learning Human Feedback (RLHF). It’s complicated work. The mere establishment of ‘ground truth’ on a quality like ‘humor’ is challenging. Our journey thus begins with evals and benchmarks.

We’re excited to publish our first research: ‘HumorBench v0.1’, the beginnings of an effort to measure speech models on humor performance, a particularly challenging task that requires nuance, timing, and contextual awareness.

What we’ve shared is preliminary. Small N, and just hundreds of judgments – it serves as a sort of focus group teeing us up for a much larger and eventually automated benchmark. Despite its limitations, we chose to publish it.

Having collected the opinions of 35 world-class comedy professionals (we’re talking Emmy winners, SNL alums, and Netflix showrunners), we observed a few interesting things:

- We asked our evaluators to rate both humor delivery and “humanness,” and in the end we found that different models came out ahead in each of these categories.

- The top-rated models in both of these categories came from voice-specific AI labs, beating out giant multimodal model players.

- Sarcasm presents a unique path in future evaluations towards building consensus around the “shape” of a joke. When we asked our evaluators to give us their ideal delivery (both in writing and via voice recording), our evaluators aligned more on the emphasis and pitch qualities of certain portions of a sarcastic statement than they did on a longer joke (eg. setup/punchline).

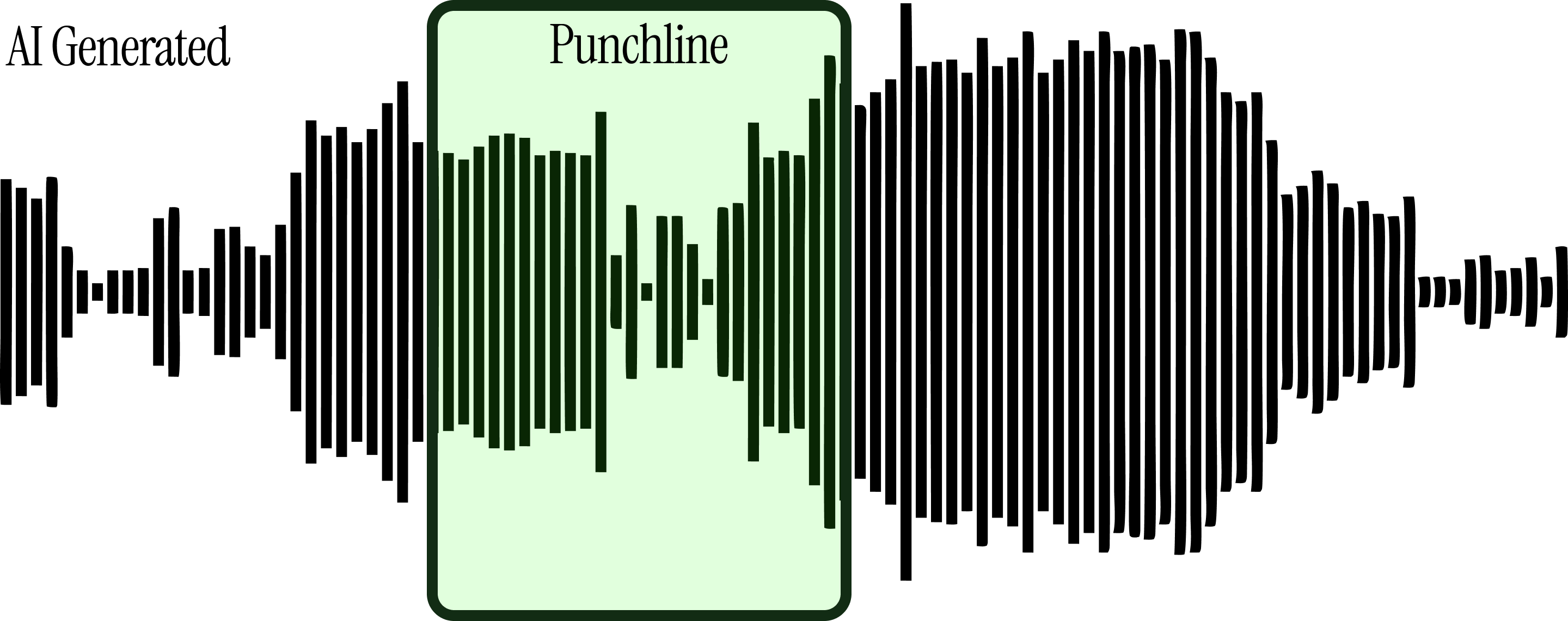

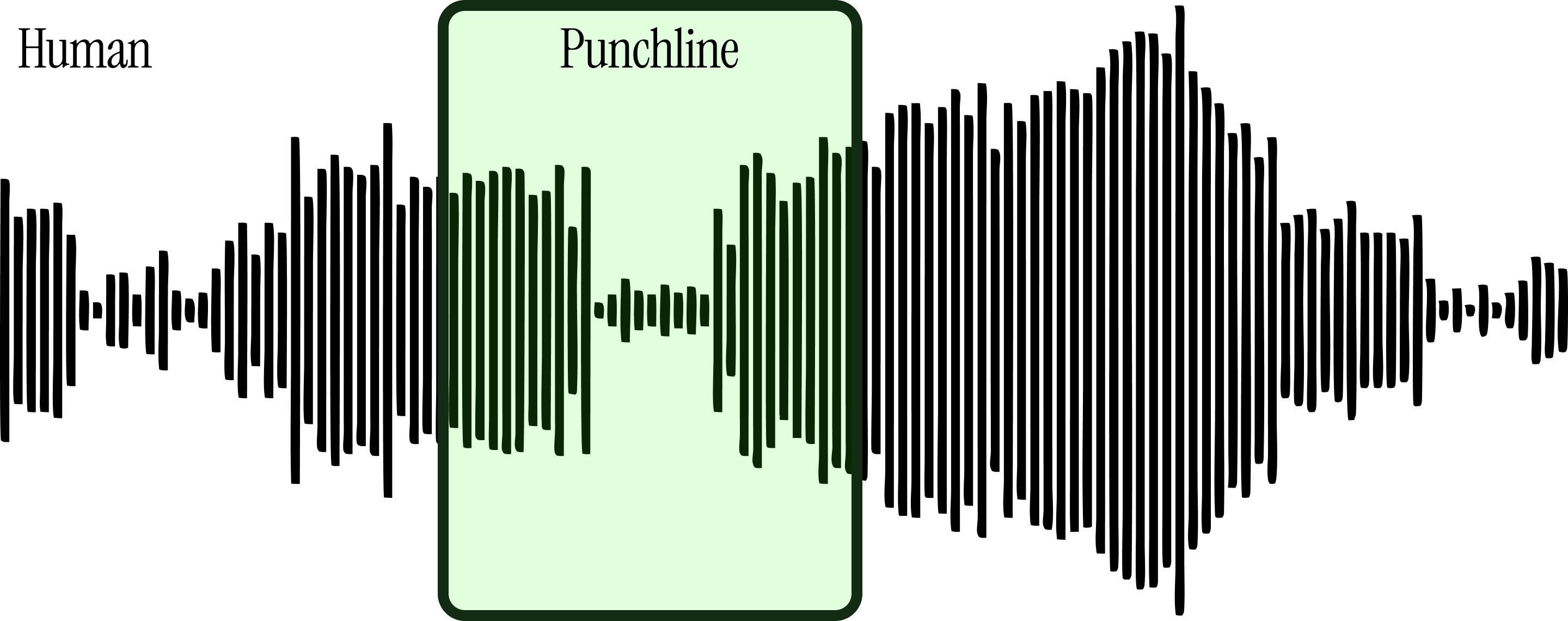

We’re in the midst of analyzing 70 joke samples performed by our comedy experts to establish the identifying traits of great humor performance, and ultimately form the HumorBench ground truth. Through spectrogram analysis, we’ll create a humor performance recipe with features rooted in frequency, pitch, volume, direction, and amplitude.

We hope to pull in many talented contributors from the worlds of AI and comedy. This is the start of a conversation! Please reach out with your thoughts, questions, and feedback.

Request Full Report| Company | Model | Sample |

|

|

|

||

|---|---|---|---|---|---|---|---|

| Hume | Octave TTS |

|

39% | 59% | 28% – 50% | 52% – 65% | Request |

| ElevenLabs | Eleven Turbo v2.5 |

|

23% | 59% | 15% – 34% | 52% – 65% | Request |

| PlayAI | Dialog 1.0 (PlayDialog) |

|

14% | 46% | 8% – 24% | 40% – 52% | Request |

| Chirp3 |

|

10% | 30% | 5% – 19% | 25% – 36% | Request | |

| Murf | Murf Speech Gen 2 |

|

3% | 22% | 1% – 10% | 21% – 32% | Request |

| Cartesia | Sonic 2 |

|

3% | 17% | 1% – 10% | 13% – 22% | Request |

| OpenAI | GPT-4o mini TTS |

|

3% | 13% | 1% – 10% | 9% – 18% | Request |

| Sesame | Sesame CSM (Conversational Speech Model) |

|

3% | 70% | 1% – 10% | 64% – 75% | Request |

| Amazon | Amazon Polly (Generative Engine) |

|

1% | 6% | 0% – 8% | 3% – 9% | Request |

| Microsoft | Azure AI Speech Studio |

|

1% | 26% | 0% – 8% | 15% – 26% | Request |

Spectrogram Analysis (coming soon)

We are in the midst of analyzing 70 samples from our comedy experts to

establish the identifying traits of great humor performance.

We are conducting spectrogram analysis to create a (preliminary) humor

performance recipe with features rooted in frequency, pitch, volume,

direction, and amplitude. These insights will serve as an MVP 'ground

truth', to be validated and carried forward in future analysis.

Methodology

Experts

From a pool of hundreds of applicants, we selected 35 top tier evaluators including Hollywood comedy executives, stand-up comedians, and professional comedy writers.

As part of our vetting process, each potential evaluator went through the following process:

(1) Live interview assessing comedic taste and analysis skills. Applicants were primarily judged on their ability to dissect comedic performance into component parts and calibrate quality.

(2) Small evaluation exercise where potential evaluators were asked to give feedback on two setup-punchline style jokes delivered by different speech models. In rating these responses we looked for strong demonstration of comedy expertise, thoroughness, and any perceived bias.

(3) Resume and professional credential screening. Here, we judged evaluators on pedigree and accomplishments – e.g., have they contributed to high performing teams and productions? Which / how many / how long?

The expert evaluation process was overseen by co-founder and CEO Chris Giliberti, Executive Producer on comedy productions such as ALEX INC, which starred Zach Braff on ABC. We additionally consulted with Jeff Stern, former President of Apatow Productions & producer on comedy productions such as LIVING WITH YOURSELF on Netflix.

In the end, our evaluator pool broke down as follows:

- 65% comedy creative executives (professionals who review and improve the work of comedians in Hollywood), with employers such as Amazon, Netflix, Sony, and Warner Brothers Discovery

- 35% comedy writers, stand up comedians, and comedic actors with writing and performance credits on Paramount, Adult Swim, Comedy Central

Up Next

Armed with learnings from this preliminary research, we are executing a larger research effort with the dual goals of:

- Shipping the voice AI field’s first humor performance benchmark

- Creating a higher confidence leaderboard of the funniest speech models

This round is significantly larger in respondents (N=100+) and judgements (thousands in aggregate.)

We are employing a pairwise ‘arena-style’ evaluation to generate both a fresh leaderboard and ELO-based ranking. Most data, code, and outputs will be published.

To create a production-ready version of HumorBench, we will:

- Run our professional comedian reads by crowdworkers to increase our confidence they are indeed a solid ground truth

- Perform cluster analysis on both comedian and AI samples to understand separability

- Tune a classifier capable of automatically scoring the humor quality of audio samples (or at least their spectrograms), as defined by alignment with ground truth

Key Metrics

Performance Quality

Using a 4-point Likert scale, we asked evaluators to rate individual joke performances. When relevant, we asked for separate ratings for the “setup” and “punchline” portions of a joke to collect more granular quantitative feedback.

Qualitative Feedback

We asked evaluators to leave feedback for each joke performance via short voice notes. We conducted manual and automated sentiment analysis on the voice note transcripts.

“Perceived Human” Output

We asked evaluators to guess whether each performance was AI or human, selecting from the following: Probably AI, Definitely AI, Probably Human, Definitely Human.

“Top Pick” Output

At the end of our survey, evaluators were asked to pick a favorite read from all 10 AI outputs. They were able to relisten to samples as many times as required, with all samples arrayed on the same page. We conducted a simple tally of the evaluators’ favorite AI output across each joke and model.

Limitations

This initial research functioned more as a ‘focus group’. Humor is difficult to measure, so we are working in phases to establish insights and build up to our goal of a truly automated benchmark.

As you interpret our findings, we’d like to acknowledge several limitations:

- Our sample size is relatively small (N=35). In subsequent research, we plan to scale N into the 100s.

- We generated outputs in a single shot, with just two outputs judged per model. Model outputs can vary widely across generations, and even more widely with manipulations to temperature and other settings. In our next round, we will include many more outputs per model, both out of fairness to capture a wider spectrum of quality, and to capture more overall judgments.

- There is an existing body of research on speech model quality which we have not referenced here nor factored into our own analysis. We plan to be more referential in future work.

Contributors

Chris Giliberti - Avail Co-founder & CEO. Former head of TV at Spotify, and management consultant at The Boston Consulting Group (BCG)

Ryan Riebling - Avail Co-founder & Head of Eng. Former Amazon engineering lead

Ryan Shellberg - Avail senior engineer. Former Amazon engineer

Benjamin Genchel - ML Researcher. Former Spotify & Amazon ML engineer

Jeff Stern - Producer. Former head of development, Apatow Productions, and executive producer, Living with Yourself (Netflix)

Study Design

Evaluators rated two jokes each from 10 models. Joke #1 contained a set-up & punchline, and joke #2 was a sarcastic statement. Jokes were chosen for their simplicity of successful delivery: each was hypothesized to only ‘work’ with emphasis or pitch variance placed on specific words. (Our outputs from professional comedians suggested otherwise, which will inform our next round.)

Jokes were analyzed for:

- Quality of set-up delivery (Likert scale)

- Quality of punchline delivery (Likert scale)

- Anthropic quality of the voice (Likert scale)

- Opportunities for delivery improvement (delivered as an unstructured voice note)

- Top pick (select favorite of 10)

To minimize demographic bias, we selected a medium-tenor male voice (chosen to resemble the delivery style of a stereotypical stand-up comic) across all models. Each model had one opportunity to generate the joke. No temperature, pacing, or delivery parameters were adjusted. For models with prompt boxes, we used a consistent instruction: “Stand-up comedian, comedic delivery”

We used a 4-point Likert scale to encourage decisive evaluator feedback (no neutral option). Acknowledging that there is a “ground truth” challenge with humor, we selected jokes that we believed would have clear points of emphasis across professional comedians’ delivery. Additionally, as part of our research, we asked our evaluators (all expert comedy executives, comedians, and comedy writers) to provide their ideal delivery of the test jokes - in both writing and verbally - to help establish ground truth data points.

We additionally included human honeypots for calibration.

Model Configurations

We tested ten speech models, making sure to include top models from industry leaders as well as emerging ones (eg. Sesame, which had a splashy launch earlier this year). Selection was based on a mix of popularity, technical reputation (e.g. preference for foundation models) and market factors. Each model was prompted to perform the same joke using default settings (see “Study Design” below for more). All models tested are proprietary.

| Company | Model | Joke 1 Voice | Joke 2 Voice | Prompt Box |

|---|---|---|---|---|

| AWS | Amazon Polly (Generative Engine) | Stephen | Matthew | |

| Azure | Azure AI Speech Studio | Andrew Multilingual | Andrew Multilingual | |

| Cartesia | Sonic 2 | Harry | Harry | |

| ElevenLabs | Eleven Turbo v2.5 | Mark - Natural Conversations | Brian - Deep and Funny | |

| Chirp3 | Algenib | Orus | ||

| Hume | Octave TTS | no preselected voice, only the prompt “male stand up comedian, comedic delivery” | no preselected voice, only the prompt “male stand up comedian, comedic delivery” | “Male stand up comedian, comedic delivery” |

| Murf | Murf Speech Gen 2 | Miles | Miles | |

| OpenAI | GPT-4o mini TTS | Ash | Onyx | “Stand up comedian, comedic delivery” |

| PlayAI | Dialog 1.0 (PlayDialog) | Dexter | Angelo | |

| Sesame* | Sesame CSM (Conversational Speech Model) fine tuned variant via interactive voice demo on blog | Miles | Miles |